The Best Data Anonymization Tools For Privacy Protection Compliance

Organizations use data anonymization tools to remove personally identifiable information from their datasets. Without it, they risk data breaches and hefty fines from regulators for non-compliance. Without anonymizing data, you cannot fully use or share your datasets.

Many anonymization tools can’t guarantee full compliance. Past-gen methods might leave personal information vulnerable to re-identification by malicious actors. Some statistical anonymization methods reduce dataset quality to the point where it’s unreliable for data analytics.

We at Syntho will introduce you to anonymization methods and the key differences between past-gen and next-gen tools. We’ll tell you about the best data anonymization tools and outline the key considerations for choosing one.


What are data anonymization tools?

Data anonymization is the technique of removing or altering confidential information in datasets. Organizations can’t freely access, share, and use data that can be directly or indirectly traced to individuals, because privacy regulations restrict how such data is handled. The key regulations include:

- General Data Protection Regulation (GDPR). The EU regulation protects personal data privacy, requiring a lawful basis, such as consent, for data processing and granting individuals the right to access their data. The United Kingdom has a similar law called the UK GDPR.

- California Consumer Privacy Act (CCPA). California’s privacy law focuses on consumer rights regarding data sharing.

- Health Insurance Portability and Accountability Act (HIPAA). The US law’s Privacy Rule establishes standards for protecting patients’ health information.

How do data anonymization tools work?

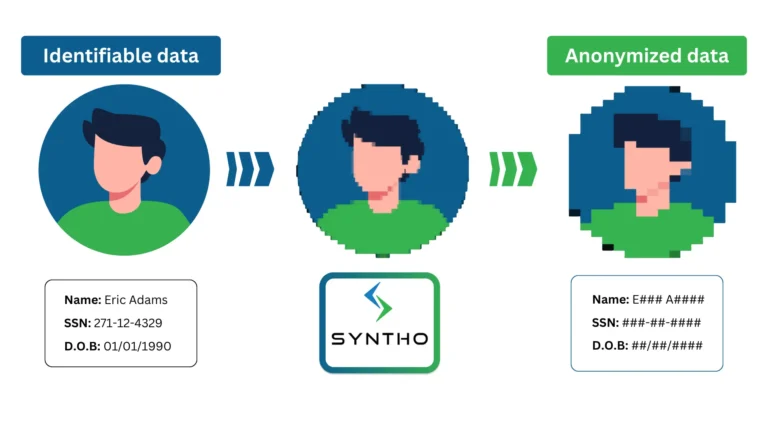

Data anonymization tools scan datasets for sensitive information and replace it with artificial data. The software finds such data in tables and columns, text files, and scanned documents.

This process strips data of elements that can link it to individuals or organizations. The types of data obscured by these tools include:

- Personally identifiable information (PII): Names, identification numbers, birth dates, billing details, phone numbers, and email addresses.

- Protected health information (PHI): Medical records, health insurance details, and personal health data.

- Financial information: Credit card numbers, bank account details, investment data, and others that can be linked to corporate entities.
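To make the scanning step more concrete, here is a minimal Python sketch of column-level detection. It assumes simple regular-expression rules and a hypothetical `scan_columns` helper with a match-rate `threshold`; commercial scanners, including AI-powered ones, combine many more signals, so treat this as an illustration of the idea only.

```python
import re

# Hypothetical detectors, checked in order. Dates come before phone numbers
# because a value like "1990-04-12" would also satisfy the loose phone pattern.
DETECTORS = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "date": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
    "phone": re.compile(r"^\+?[\d\s()-]{7,}$"),
}


def scan_columns(rows, threshold=0.8):
    """Map each column to the first PII type matching at least `threshold` of its values."""
    findings = {}
    for column in rows[0]:
        values = [str(r[column]) for r in rows if r.get(column) not in (None, "")]
        if not values:
            continue
        for label, pattern in DETECTORS.items():
            hits = sum(1 for v in values if pattern.match(v))
            if hits / len(values) >= threshold:
                findings[column] = label
                break
    return findings


rows = [
    {"id": 1, "email": "jane@example.com", "birth_date": "1990-04-12",
     "phone": "+31 20 123 4567", "plan": "basic"},
    {"id": 2, "email": "sam@example.org", "birth_date": "1985-11-30",
     "phone": "(020) 555-0199", "plan": "pro"},
]
findings = scan_columns(rows)
```

Here `findings` flags the `email`, `birth_date`, and `phone` columns and leaves `id` and `plan` alone. Pattern rules like these miss free-text names, which is one reason tools add machine-learning detectors.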

For example, healthcare organizations anonymize patient addresses and contact details to ensure HIPAA compliance for cancer research. Similarly, a finance company obscured transaction dates and locations in its datasets to comply with GDPR.

While the concept is the same, several distinct techniques exist for anonymizing data.

Data anonymization techniques

Anonymization happens in many ways, and not all methods are equally reliable for compliance and utility. This section describes the main types of methods and their limitations.

Pseudonymization

Pseudonymization is a reversible de-identification process where personal identifiers are replaced with pseudonyms. It maintains a mapping between the original and altered data, with the mapping table stored separately.

The downside of pseudonymization is that it’s reversible. With additional information, malicious actors can trace the data back to the individual. Under GDPR, pseudonymized data isn’t considered anonymized data, so it remains subject to data protection regulations.
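The mapping-table idea, and why it makes pseudonymization reversible, can be sketched in a few lines of Python. The function names, the `P-` prefix, and the sample records are hypothetical; the key point is that whoever holds `table` can undo the replacement.

```python
import secrets


def pseudonymize(records, field):
    """Replace `field` with random pseudonyms and return (records, mapping table)."""
    mapping = {}  # original value -> pseudonym; must be stored apart from the data
    used = set()
    result = []
    for record in records:
        value = record[field]
        if value not in mapping:
            pseudonym = "P-" + secrets.token_hex(4)
            while pseudonym in used:  # guard against rare random collisions
                pseudonym = "P-" + secrets.token_hex(4)
            used.add(pseudonym)
            mapping[value] = pseudonym
        result.append({**record, field: mapping[value]})
    return result, mapping


def reverse_pseudonyms(records, field, mapping):
    """Anyone holding the mapping table can restore the original identifiers."""
    reverse = {pseudonym: value for value, pseudonym in mapping.items()}
    return [{**record, field: reverse[record[field]]} for record in records]


patients = [
    {"name": "Jane Doe", "diagnosis": "asthma"},
    {"name": "John Roe", "diagnosis": "diabetes"},
    {"name": "Jane Doe", "diagnosis": "flu"},
]
pseudo, table = pseudonymize(patients, "name")
restored = reverse_pseudonyms(pseudo, "name", table)
```

The same person always gets the same pseudonym, which keeps records linkable, and `restored` is identical to `patients`, which is exactly why GDPR does not treat this as anonymization.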

Data masking

The data masking method creates a structurally similar but fake version of the data to protect sensitive information. This technique replaces real data with altered characters while keeping the same format, so systems can still use it normally. In theory, this helps maintain the operational functionality of datasets.

In practice, masking often reduces data utility. It may fail to preserve the original data’s distribution or characteristics, making it less useful for analysis. Another challenge is deciding what to mask: if done incorrectly, masked data can still be re-identified.
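A minimal Python sketch of format-preserving masking, assuming two hypothetical rules: keep only the last four digits of a card number, and only the first character of an email address’s local part. The output keeps its shape, so code that expects a card-number format still works, but the masked values no longer support analysis of the original digits.

```python
def mask_card(number: str) -> str:
    """Mask every digit except the last four; keep separators such as '-'."""
    total_digits = sum(ch.isdigit() for ch in number)
    seen = 0
    out = []
    for ch in number:
        if ch.isdigit():
            seen += 1
            out.append(ch if seen > total_digits - 4 else "X")
        else:
            out.append(ch)
    return "".join(out)


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain


masked_card = mask_card("4111-1111-1111-1234")   # "XXXX-XXXX-XXXX-1234"
masked_email = mask_email("jane.doe@example.com")  # "j*******@example.com"
```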

Generalization (aggregation)

Generalization anonymizes data by making it less detailed. It groups similar values together and reduces their precision, making it harder to tell individual records apart. This method often involves summarization methods like averaging or summing to protect individual data points.

Over-generalization can make data almost useless, while under-generalization may not offer enough privacy. There’s also a risk of residual disclosure, as aggregated datasets might still provide enough detail for re-identification when combined with other data sources.
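The sketch below generalizes two quasi-identifiers (age and ZIP code) in Python and measures the result with k-anonymity, the size of the smallest group of records that share the same quasi-identifier values. The 10-year bands, 3-digit ZIP prefixes, and sample records are illustrative assumptions; k-anonymity is a common yardstick, not necessarily one the tools discussed here use.

```python
from collections import Counter


def generalize(record):
    """Coarsen quasi-identifiers: exact age -> 10-year band, 5-digit ZIP -> 3-digit prefix."""
    low = (record["age"] // 10) * 10
    return {"age": f"{low}-{low + 9}", "zip": record["zip"][:3] + "**"}


def k_anonymity(records):
    """Smallest number of records sharing one (age, zip) combination."""
    groups = Counter((r["age"], r["zip"]) for r in records)
    return min(groups.values())


raw = [
    {"age": 34, "zip": "10115"},
    {"age": 37, "zip": "10117"},
    {"age": 31, "zip": "10119"},
    {"age": 45, "zip": "10245"},
    {"age": 48, "zip": "10247"},
]
generalized = [generalize(r) for r in raw]
```

On this sample every raw record is unique (k = 1), while the generalized version places each record in a group of at least two (k = 2). Wider bands would raise k further at the cost of detail, which is the over- versus under-generalization trade-off.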

Perturbation

Perturbation modifies the original datasets by rounding values and adding random noise. The data points are changed subtly, disrupting their original values while maintaining overall data patterns.

The downside of perturbation is that the data is not fully anonymized. If the changes are too small, the original values may be recovered and individuals re-identified.
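A minimal Python sketch of perturbation, assuming rounding to a fixed step plus Gaussian noise (`round_to` and `noise_sd` are hypothetical parameters, and the salary column is synthetic sample data). Individual values change, but the mean of a large column stays close to the original.

```python
import random
import statistics


def perturb(values, noise_sd, round_to, seed=None):
    """Round each value to the nearest multiple of `round_to`, then add Gaussian noise."""
    rng = random.Random(seed)
    return [round(v / round_to) * round_to + rng.gauss(0, noise_sd) for v in values]


salaries = [40_000 + (i * 7919) % 30_000 for i in range(1000)]
noisy = perturb(salaries, noise_sd=500, round_to=100, seed=42)
mean_shift = abs(statistics.fmean(noisy) - statistics.fmean(salaries))
```

Choosing `noise_sd` is the crux: too small and values can be estimated back, too large and the data loses its analytical value.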

Data swapping

Swapping is a technique in which attribute values are rearranged among the records in a dataset. This method is particularly easy to implement. The resulting records no longer correspond to the original ones and are not directly traceable to their sources.

Indirectly, however, swapping remains reversible: swapped data is vulnerable to disclosure even with limited secondary sources. It’s also hard to maintain the semantic integrity of some swapped data. For instance, when swapping names in a database, the system might fail to distinguish between male and female names.
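Swapping can be sketched as randomly permuting one column across records. This minimal Python example (hypothetical helper and sample data) keeps the column’s overall set of values intact while breaking the link between each salary and the rest of its record.

```python
import random


def swap_column(records, field, seed=None):
    """Randomly permute one attribute's values across records; other fields stay put."""
    rng = random.Random(seed)
    values = [r[field] for r in records]
    rng.shuffle(values)
    return [{**r, field: v} for r, v in zip(records, values)]


employees = [
    {"id": 1, "city": "Utrecht", "salary": 52_000},
    {"id": 2, "city": "Delft", "salary": 61_000},
    {"id": 3, "city": "Leiden", "salary": 47_000},
    {"id": 4, "city": "Breda", "salary": 58_000},
]
swapped = swap_column(employees, "salary", seed=3)
```

Aggregate statistics on `salary` alone are unchanged, but any analysis that relates salary to city is now unreliable.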

Tokenization

Tokenization replaces sensitive data elements with tokens — non-sensitive equivalents without exploitable values. The tokenized information is usually a random string of numbers and characters. This technique is often used to safeguard financial information while maintaining its functional properties.

Managing and scaling token vaults can be difficult with some software. The vault also introduces a security risk: sensitive data could be exposed if an attacker breaches it.
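The sketch below models a token vault in Python. It assumes a hypothetical in-memory `TokenVault` that issues format-preserving tokens for digit-only card numbers, keeping the last four digits so the token still looks and behaves like a card number. The dictionaries inside the vault are exactly the asset an attacker would target.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault; production vaults are hardened, access-controlled services."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, card_number: str) -> str:
        """Return a random digit string of equal length that keeps the last four digits."""
        if card_number in self._value_to_token:
            return self._value_to_token[card_number]
        while True:
            prefix = "".join(secrets.choice("0123456789") for _ in range(len(card_number) - 4))
            token = prefix + card_number[-4:]
            if token != card_number and token not in self._token_to_value:
                break
        self._token_to_value[token] = card_number
        self._value_to_token[card_number] = token
        return token

    def detokenize(self, token: str) -> str:
        """Only systems allowed to query the vault can recover the original value."""
        return self._token_to_value[token]


vault = TokenVault()
card = "4111111111111234"
token = vault.tokenize(card)
```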

Randomization

Randomization replaces values with random or mock data. It is a straightforward approach that helps preserve the confidentiality of individual data entries.

This technique doesn’t work if you need to preserve the exact statistical distribution. It is likely to degrade the utility of complex datasets, such as geospatial or temporal data. Inadequate or improperly applied randomization can’t ensure privacy protection, either.
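A minimal Python sketch of randomization, assuming a hypothetical pool of mock names and a uniform 18 to 90 age range. Because replacement values are drawn independently of the originals, the original age distribution is lost, which shows why this technique cannot preserve exact statistics.

```python
import random

# Hypothetical pool of mock values
MOCK_NAMES = ["Alex", "Sam", "Robin", "Kim", "Jordan"]


def randomize(records, seed=None):
    """Replace name and age with random mock values; other fields are kept."""
    rng = random.Random(seed)
    return [
        {**record, "name": rng.choice(MOCK_NAMES), "age": rng.randint(18, 90)}
        for record in records
    ]


customers = [
    {"name": "Jane Doe", "age": 34, "plan": "basic"},
    {"name": "John Roe", "age": 61, "plan": "pro"},
]
randomized = randomize(customers, seed=7)
```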

Data redaction

Data redaction is the process of entirely removing information from datasets by blacking out, blanking, or erasing text and images. This prevents access to sensitive production data and is a common practice in legal and official documents. However, it also makes the data unfit for accurate statistical analytics, model training, and clinical research.
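For free text, redaction is often pattern based. This minimal Python sketch assumes two illustrative regular expressions (email addresses and phone numbers) and replaces every match with `[REDACTED]`.

```python
import re

# Illustrative patterns only; real redaction tools use far broader detection
PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),  # email addresses
    re.compile(r"\+?\d[\d\s-]{7,}\d"),            # phone numbers
]


def redact(text: str) -> str:
    """Irreversibly replace every pattern match with a fixed marker."""
    for pattern in PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


note = redact("Contact Jane at jane.doe@example.com or +31 20 123 4567.")
```

The name “Jane” survives: simple patterns cannot recognize names, so real tools typically add named-entity recognition. Whatever is matched is gone for good, which is why redacted data is of little use for analytics.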

As these examples show, each technique has flaws that leave loopholes for malicious actors to exploit. Most also remove essential elements from datasets, which limits their usability. This isn’t the case with next-gen techniques.

Next-generation anonymization tools

Modern anonymization software employs sophisticated techniques to minimize the risk of re-identification. These tools help organizations comply with privacy regulations while maintaining the structural quality of data.

Synthetic data generation

Synthetic data generation offers a smarter approach to anonymizing data while maintaining data utility. This technique uses algorithms to create new datasets that mirror real data’s structure and properties.

Synthetic data replaces PII and PHI with mock data that can’t be traced to individuals. This ensures compliance with data privacy laws, such as GDPR and HIPAA. By adopting synthetic data generation tools, organizations ensure data privacy, mitigate risks of data breaches, and accelerate the development of data-driven applications.
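Production generators rely on AI models, but the underlying idea of learning a dataset’s properties and sampling new records from them can be shown with a deliberately simple stand-in. This Python sketch assumes two numeric columns and fits a bivariate normal distribution (means, standard deviations, and one linear correlation); it is not how Syntho or any specific product works, and a model this simple does not by itself guarantee privacy.

```python
import math
import random
import statistics


def fit_bivariate(xs, ys):
    """Estimate means, standard deviations, and the Pearson correlation of two columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sx, sy = statistics.stdev(xs), statistics.stdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)
    return mx, my, sx, sy, cov / (sx * sy)


def sample_synthetic(params, n, seed=0):
    """Draw n new (x, y) rows from a bivariate normal with the fitted parameters."""
    mx, my, sx, sy, rho = params
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mx + sx * z1
        # Cholesky factor of the 2x2 correlation matrix ties y to x with correlation rho
        y = my + sy * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        rows.append((x, y))
    return rows


# Hypothetical "real" data: ages and correlated incomes
real_ages = [20 + (i * 7) % 45 for i in range(500)]
real_incomes = [900 * a + 8000 + (i * 37) % 5000 for i, a in enumerate(real_ages)]

real_params = fit_bivariate(real_ages, real_incomes)
synthetic = sample_synthetic(real_params, 5000, seed=1)
synthetic_params = fit_bivariate([x for x, _ in synthetic], [y for _, y in synthetic])
```

Refitting the synthetic rows gives means and a correlation close to those of the real data, even though no synthetic row is copied from it. Note that this toy model outputs non-integer ages; real generators also handle data types and categorical columns.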

Homomorphic encryption

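Homomorphic encryption (the name translates as “same structure”) transforms data into ciphertext while preserving its algebraic structure: operations performed on the ciphertext correspond to operations on the original values. Results computed on encrypted data therefore match the results on the original data once decrypted, resulting in excellent accuracy for testing.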

This method allows complex computations to be performed directly on encrypted data without decrypting it first. Organizations can securely store encrypted files in the public cloud and outsource data processing to third parties without compromising security. Encryption also supports compliance, although under GDPR encrypted personal data generally still counts as personal data, because the key holder can decrypt it.

However, complex algorithms require expertise to implement correctly. In addition, computing on homomorphically encrypted data is much slower than operating on unencrypted data, so it may not be the optimal solution for DevOps and Quality Assurance (QA) teams, who require quick access to data for testing.
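To show what computing on ciphertext means, here is a toy Paillier cryptosystem in Python. Paillier is only additively homomorphic (multiplying two ciphertexts adds their plaintexts), whereas fully homomorphic schemes support arbitrary computation and require specialized libraries. The tiny primes and the seeded `random.Random` are for illustration only and are completely insecure; the code needs Python 3.9 or later for `math.lcm`.

```python
import math
import random


def keygen(p, q):
    """Build a Paillier key pair from two primes (toy sizes only)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1  # standard simplified choice of generator
    # mu is the inverse mod n of L(g^lam mod n^2), where L(u) = (u - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu)


def encrypt(public_key, m, rng):
    """Encrypt an integer 0 <= m < n with fresh randomness r coprime to n."""
    n, g = public_key
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(public_key, private_key, c):
    n, _ = public_key
    lam, mu = private_key
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(public_key, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    n, _ = public_key
    return (c1 * c2) % (n * n)


public_key, private_key = keygen(293, 433)  # far too small to be secure
rng = random.Random(0)  # reproducible toy randomness; real code would use the secrets module
c1 = encrypt(public_key, 42_000, rng)
c2 = encrypt(public_key, 58_000, rng)
total = decrypt(public_key, private_key, add_encrypted(public_key, c1, c2))
```

A third party could compute the encrypted total of two salaries without ever seeing 42,000 or 58,000; only the private-key holder can decrypt the result, 100,000.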

Secure multiparty computation

Secure multiparty computation (SMPC) is a cryptographic method that lets several parties jointly compute a result from their combined data. Each party encrypts or secret-shares its input, the parties compute on these protected inputs together, and each receives the output. This way, every member gets the result it needs while keeping its own data secret.

Because no single party can reveal the inputs or the result on its own, the method offers a high level of confidentiality. However, SMPC requires substantial communication between the parties and can take significant time to produce results.
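A common building block of SMPC is additive secret sharing. In this minimal Python sketch, which assumes honest-but-curious parties and hypothetical patient counts from three hospitals, each input is split into random shares so the parties can compute the total without anyone seeing another party’s number.

```python
import random

PRIME = 2**61 - 1  # all share arithmetic is done modulo this prime


def make_shares(secret, n_parties, rng):
    """Split a secret into n random shares that sum to it modulo PRIME."""
    shares = [rng.randrange(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def secure_sum(private_inputs, rng):
    """Compute the total of all inputs from shares only."""
    n = len(private_inputs)
    # Party i sends share j of its input to party j
    shares = [make_shares(x, n, rng) for x in private_inputs]
    # Party j adds up the shares it received; each single share looks random
    partial_sums = [sum(shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Combining the published partial sums reveals only the total
    return sum(partial_sums) % PRIME


patient_counts = [120, 340, 95]  # hypothetical inputs from three hospitals
total = secure_sum(patient_counts, random.Random(1))  # real code would use the secrets module
```

`total` equals 555. Real protocols also handle multiplication, dishonest parties, and network rounds between parties, which is where much of the time cost comes from.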

| Previous-generation data anonymization techniques | Description | Next-generation anonymization tools | Description |
|---|---|---|---|
| Pseudonymization | Replaces personal identifiers with pseudonyms while maintaining a separate mapping table. | Synthetic data generation | Uses an algorithm to create new datasets that mirror real data’s structure while ensuring privacy and compliance. |
| Data masking | Alters real data with fake characters, keeping the same format. | Homomorphic encryption | Transforms data into ciphertext while retaining the original structure, allowing computation on encrypted data without decryption. |
| Generalization (aggregation) | Reduces data detail, grouping similar data. | Secure multiparty computation | Cryptographic method where multiple parties encrypt their input, perform computations, and achieve joint results. |
| Perturbation | Modifies datasets by rounding values and adding random noise. | | |
| Data swapping | Rearranges dataset attribute values to prevent direct traceability. | | |
| Tokenization | Substitutes sensitive data with non-sensitive tokens. | | |
| Randomization | Adds random or mock data to alter values. | | |
| Data redaction | Removes information from datasets. | | |

Table 1. Comparison of previous- and next-generation anonymization techniques

Smart data de-identification as a new approach to data anonymization

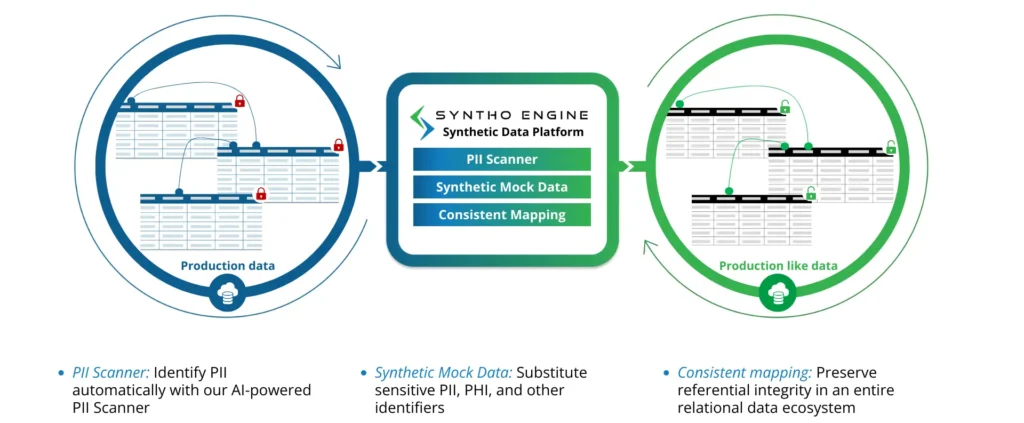

Smart de-identification anonymizes data using AI-generated synthetic mock data. Platforms with this feature transform sensitive information into compliant, non-identifiable data in the following ways:

- De-identification software analyzes the existing datasets and identifies PII and PHI.

- Organizations can select which sensitive data to replace with artificial information.

- The tool produces new datasets with compliant data.

This technology is useful when organizations need to collaborate and exchange valuable data securely. It’s also useful when data needs to be made compliant in several relational databases.

Smart de-identification keeps the relationships within the data intact through consistent mapping. Companies can use the generated data for in-depth business analytics, machine learning training, and clinical tests.
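One way to keep relationships intact is to derive replacement keys deterministically. This Python sketch assumes a keyed hash (HMAC-SHA256) as the mapping function and a hypothetical `SECRET_KEY`; it illustrates consistent mapping in general, not Syntho’s implementation. Name columns would be replaced with mock data in a separate step, which is omitted here.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would live in a secrets manager, never with the data
SECRET_KEY = b"example-key-do-not-use"


def consistent_id(value: str) -> str:
    """Deterministically map an identifier to a surrogate using HMAC-SHA256."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "C-" + digest[:16]


customers = [
    {"customer_id": "CUST-001", "segment": "retail"},
    {"customer_id": "CUST-002", "segment": "business"},
]
orders = [
    {"order_id": 10, "customer_id": "CUST-002"},
    {"order_id": 11, "customer_id": "CUST-001"},
]

# The same function is applied in every table, so foreign keys still line up
anon_customers = [{**c, "customer_id": consistent_id(c["customer_id"])} for c in customers]
anon_orders = [{**o, "customer_id": consistent_id(o["customer_id"])} for o in orders]
```

Because `consistent_id` returns the same surrogate for the same input, joins between `anon_customers` and `anon_orders` still work. The key must be protected: anyone who holds it can recompute the mapping for known identifiers.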

With so many methods, you need a way to determine if the anonymization tool is right for you.

How to choose the right data anonymization tool

- Operational scalability. Choose a tool that can scale up and down with your operational demands, and stress-test its performance under increased workloads.

- Integration. Data anonymization tools should smoothly integrate with your existing systems and analytical software, as well as the continuous integration and continuous deployment (CI/CD) pipeline. Compatibility with your data storage, encryption, and processing platforms is vital for seamless operations.

- Consistent data mapping. Make sure the anonymized data preserves the integrity and statistical accuracy appropriate for your needs. Previous-generation anonymization techniques erase valuable elements from datasets. Modern tools, however, maintain referential integrity, making the data accurate enough for advanced use cases.

- Security mechanisms. Prioritize tools that protect real datasets and anonymized results against internal and external threats. The software should support deployment in secure customer infrastructure, role-based access control, and two-factor authentication.

- Compliant infrastructure. Ensure the tool stores the datasets in secure storage that complies with GDPR, HIPAA, and CCPA regulations. In addition, it should support data backup and recovery tools to avoid the possibility of downtime due to unexpected errors.

- Payment model. Consider immediate and long-term costs to understand whether the tool aligns with your budget. Some tools are designed for larger enterprises and medium-sized businesses, while others have flexible models and usage-based plans.

- Technical support. Evaluate the quality and availability of customer and technical support. A provider might help you integrate the data anonymization tools, train the staff, and address technical issues.

The 7 best data anonymization tools

Now that you know what to look for, let’s explore what we believe are the most reliable tools to mask sensitive information.

1. Syntho

Syntho is powered by synthetic data generation software that provides opportunities for smart de-identification. The platform’s rule-based data creation brings versatility, enabling organizations to craft data according to their needs.

An AI-powered scanner identifies all PII and PHI across datasets, systems, and platforms. Organizations can choose which data to remove or mock to comply with regulatory standards. Meanwhile, the subsetting feature helps make smaller datasets for testing, reducing the burden on storage and processing resources.

The platform is useful in various sectors, including healthcare, supply chain management, and finance. Organizations use the Syntho platform to create non-production data and develop custom testing scenarios.

You may learn more about Syntho’s capabilities by scheduling a demo.

2. K2view

3. Broadcom

4. Mostly AI

5. ARX

6. Amnesia

7. Tonic.ai

Data anonymization tools use cases

Companies in finance, healthcare, advertising, and public service use anonymization tools to stay compliant with data privacy laws. The de-identified datasets are used for various scenarios.

Software development and testing

Anonymization tools enable software engineers, testers, and QA professionals to work with realistic datasets without exposing PII. Advanced tools help teams self-provision the necessary data that mimics real-world testing conditions without compliance issues. This helps organizations improve their software development efficiency and software quality.

Real cases:

- Syntho’s software created anonymized test data that preserves the statistical values of real data, enabling developers to try different scenarios at a greater pace.

- Google’s BigQuery warehouse offers a dataset anonymization feature to help organizations share data with suppliers without breaking privacy regulations.

Clinical research

Medical researchers, especially in the pharmaceutical industry, anonymize data to preserve privacy for their studies. Researchers can analyze trends, patient demographics, and treatment outcomes, contributing to medical advancements without risking patient confidentiality.

Real cases:

- Erasmus Medical Center uses Syntho’s AI-powered anonymization tools to generate and share high-quality datasets for medical research.

Fraud prevention

In fraud prevention, anonymization tools allow for secure analysis of transactional data to identify malicious patterns. De-identification tools also make it possible to train AI software on real-world data to improve fraud and risk detection.

Real cases:

- Brighterion trained its AI model on Mastercard’s anonymized transaction data, improving fraud detection rates while reducing false positives.

Customer marketing

Data anonymization techniques help assess customer preferences. Organizations share de-identified behavioral datasets with their business partners to refine targeted marketing strategies and personalize user experience.

Real cases:

- A model trained on synthetic data, generated by Syntho’s platform from a dataset of over 56,000 customers with 128 columns, accurately predicted customer churn.

Public data publishing

Agencies and governmental bodies use data anonymization to share and process public information transparently for various public initiatives. Examples include crime prediction based on data from social networks and criminal records, urban planning based on demographics and public transport routes, and assessing healthcare needs across regions based on disease patterns.

Real cases:

- Indiana University used anonymized smartphone data from about 10,000 police officers across 21 US cities to reveal neighborhood patrol discrepancies based on socioeconomic factors.

These are just a few examples. Anonymization software is used across industries to make the most of available data.

Choose the best data anonymization tools

Companies use database anonymization software to comply with privacy regulations. Once stripped of personal information, datasets can be used and shared without the risk of fines or bureaucratic processes.

Older anonymization methods like data swapping, masking, and redaction aren’t secure enough. Re-identification remains possible, which makes the data non-compliant or risky. In addition, past-gen anonymization software often degrades data quality, especially in large datasets, so organizations can’t rely on such data for advanced analytics.

You should opt for the best data anonymization software. Many businesses choose the Syntho platform for its top-grade PII identification, masking, and synthetic data generation capabilities.

Interested in learning more? Feel free to explore our product documentation or contact us for a demonstration.

About the author

Business Development Manager

Uliana Matyashovksa, a Business Development Executive at Syntho with international experience in software development and the SaaS industry, holds a master’s degree in Digital Business and Innovation from VU Amsterdam.

Over the past five years, Uliana has demonstrated a steadfast commitment to exploring AI capabilities and providing strategic business consultancy for AI project implementation.
