Guide to Synthetic Data Generation: Definition, Types, & Applications

It’s no secret that businesses face challenges in acquiring and sharing high-quality data. Synthetic data generation is a practical solution that helps produce large artificial datasets and high-quality test data without privacy risks or red tape.

Synthetic datasets can be created using a variety of methods, offering diverse applications. When properly evaluated, synthetic datasets generated using advanced algorithms help organizations speed up their analytics, research, and testing. So let’s take a closer look.

This article introduces you to synthetic data, including the main types, differences from anonymized datasets, and regulatory nuances. You’ll learn how artificially generated data solves critical data problems and minimizes certain risks. We’ll also discuss its applications across industries, accompanied by examples from our case studies.

Synthetic data: definition and market statistics

Synthetic data is artificially generated information devoid of confidential content, and it serves as an alternative to real datasets. Data scientists often call AI-generated synthetic data a synthetic data twin because of its high statistical accuracy in mimicking real data.

Artificial datasets are created using artificial intelligence (AI) algorithms and simulations that maintain the patterns and correlations of the original data. This data can include text, tables, and pictures. The algorithms replace personally identifiable information (PII) with mock data.

Do privacy laws regulate AI-generated synthetic data?

Key challenges of using real data

Many companies have a hard time finding and using relevant, high-quality data, especially in sufficient amounts for AI algorithm training. Even when they do find it, sharing or utilizing the datasets can be challenging due to privacy risks and compatibility issues. This section outlines the key challenges synthetic data can solve.

Privacy risks hinder data usage and sharing

Data security and privacy regulations, such as GDPR and HIPAA, introduce bureaucratic obstacles to data sharing and utilization. In industries like healthcare, even sharing PII between departments within the same organization can be time-consuming due to governance checks. Sharing data with external entities is even more challenging and carries more security risks.

Research from Fortune Business Insights identifies rising privacy risks as a primary catalyst for adopting synthetic data practices. The more data you store, the greater the risk of compromising privacy. According to the 2023 IBM Security Cost of a Data Breach Report, the average cost of a data breach in the US was $9.48 million. Worldwide, the average was $4.45 million, and companies with fewer than 500 employees lost $3.31 million per breach on average. And that doesn’t account for reputational damage.

Difficulties finding high-quality data

Dataset incompatibilities

Datasets sourced from various origins or within multi-table databases can introduce incompatibilities, creating complexities in data processing and analysis and hindering innovation.

For instance, data aggregation in healthcare involves electronic health records (EHRs), wearables, proprietary software, and third-party tools. Each source may use distinct data formats and information systems, leading to mismatched formats, structures, or units during integration. Synthetic data can address this challenge because it can be generated directly in the desired format, which keeps datasets compatible.

Anonymization is insufficient

Types of synthetic data generation

Synthetic data creation processes vary based on the type of data required. Synthetic data types include fully AI-generated, rule-based, and mock data, each meeting a different need.

Fully AI-generated synthetic data

This type of synthetic data is built from scratch using ML algorithms. The machine learning model trains on actual data to learn about the data’s structure, patterns, and relationships. Generative AI then uses this knowledge to generate new data that closely resembles the original’s statistical properties (again, while making it unidentifiable).

This type of fully synthetic data is useful for AI model training and is good enough to be used as if it were real data. It’s especially beneficial when you cannot share your datasets due to contractual privacy agreements. However, to generate it, you need a significant amount of original data as a starting point for machine learning model training.

Synthetic mock data

This synthetic data type refers to artificially created data that imitates the structure and format of real data but doesn’t necessarily reflect actual information. It helps developers ensure their applications can handle various inputs and scenarios without relying on genuine, private, or sensitive real-world data. This practice is essential for testing functionality and refining software applications in a controlled and secure manner.

When to use it: To replace direct identifiers (PII) or when you currently lack data and prefer not to invest time and energy in defining rules. Developers commonly employ mock data to evaluate the functionality and appearance of applications during the early stages of development, allowing them to identify potential issues or design flaws.

Even though mock data lacks the authenticity of real-world information, it remains a valuable tool for ensuring systems’ proper functioning and visual representation before actual data integration.

Note: Synthetic mocked data is often referred to as ‘fake data,’ although we don’t recommend using these terms interchangeably as they may differ in connotations.
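To make the idea concrete, here is a minimal sketch of mock data generation using only the Python standard library. The field names, name lists, and the `make_mock_customers` helper are illustrative assumptions, not part of any specific product. The records have a realistic shape but are not derived from real data.

```python
import random
import string

# Hypothetical value pools; any placeholder values would work here.
FIRST_NAMES = ["Alex", "Sam", "Robin", "Kim", "Noor"]
LAST_NAMES = ["Jansen", "Smith", "Garcia", "Chen", "Okafor"]


def make_mock_customer(rng: random.Random) -> dict:
    """Return one mock record with a realistic shape but no real information."""
    first = rng.choice(FIRST_NAMES)
    last = rng.choice(LAST_NAMES)
    digits = "".join(rng.choice(string.digits) for _ in range(7))
    return {
        "customer_id": rng.randint(100_000, 999_999),
        "name": f"{first} {last}",
        # example.com is reserved for documentation, so no real inbox is hit.
        "email": f"{first}.{last}@example.com".lower(),
        "phone": f"555-{digits[:3]}-{digits[3:]}",
    }


def make_mock_customers(n: int, seed: int = 0) -> list[dict]:
    """Generate n mock records; a fixed seed keeps test runs reproducible."""
    rng = random.Random(seed)
    return [make_mock_customer(rng) for _ in range(n)]


if __name__ == "__main__":
    for row in make_mock_customers(3):
        print(row)
```

Because the values are drawn at random from fixed pools, the output says nothing about real customers. It also carries no statistical signal, which is why mock data suits functional and UI testing rather than analytics.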

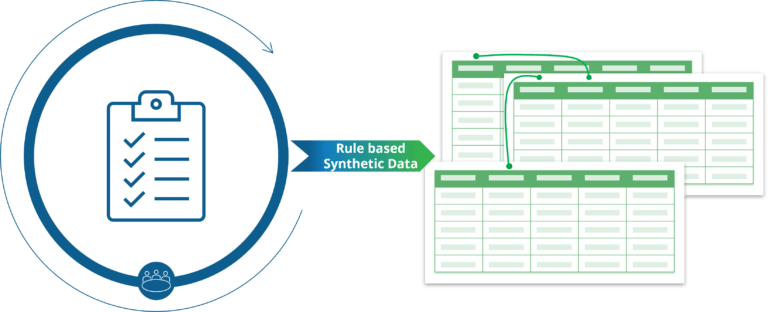

Rule-based synthetic data

Rule-based synthetic data is a useful tool for generating customized datasets based on predefined rules, constraints, and logic. This method provides flexibility by allowing users to configure data output according to specific business needs, adjusting parameters such as minimum, maximum, and average values. In contrast to fully AI-generated data, which lacks customization, rule-based synthetic data offers a tailored solution for meeting distinct operational requirements. This synthetic data generation process proves particularly useful in testing, development, and analytics, where precise and controlled data generation is essential.
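As an illustration, the sketch below generates order records from a handful of predefined rules: a quantity range, a price with minimum, maximum, and target average, and a bulk-discount rule. The column names, the specific rules, and the `generate_orders` helper are assumptions made for this example only. Rejection sampling keeps prices inside the bounds, so the resulting average is close to the target but not guaranteed to match it exactly.

```python
import random


def sample_bounded(rng, minimum, maximum, mean, spread):
    """Draw a value near `mean`, redrawing until it lies in [minimum, maximum]."""
    while True:
        value = rng.gauss(mean, spread)
        if minimum <= value <= maximum:
            return value


def generate_orders(n, seed=0):
    """Generate n order rows that satisfy a few illustrative business rules."""
    rng = random.Random(seed)
    orders = []
    for order_id in range(1, n + 1):
        # Rule 1: quantity is an integer between 1 and 10.
        quantity = rng.randint(1, 10)
        # Rule 2: unit price lies in [5, 200] and clusters around 40.
        unit_price = round(sample_bounded(rng, 5.0, 200.0, 40.0, 25.0), 2)
        # Rule 3: orders of 5 or more items get a 10% discount.
        discount = 0.10 if quantity >= 5 else 0.0
        total = round(quantity * unit_price * (1 - discount), 2)
        orders.append({
            "order_id": order_id,
            "quantity": quantity,
            "unit_price": unit_price,
            "discount": discount,
            "total": total,
        })
    return orders


if __name__ == "__main__":
    for order in generate_orders(3):
        print(order)
```

Changing a rule means changing a parameter or one line of logic, which is the kind of direct control this method offers.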

Each synthetic data generation method has different applications. Syntho’s platform stands out by creating synthetic data twins with little to no effort on your part. You get statistically accurate, high-quality synthetic data for your needs that’s free of compliance overhead.

Tabular synthetic data

The term tabular synthetic data refers to artificial datasets that mimic the structure and statistical properties of real-world tabular data, such as data stored in tables or spreadsheets. This synthetic data is created using generation algorithms and techniques designed to replicate the characteristics of the source data while ensuring that confidential or sensitive data is not disclosed.

Techniques to generate tabular synthetic data typically involve statistical modeling, machine learning models, or generative models such as generative adversarial networks (GANs) and variational autoencoders (VAEs). These synthetic data generation tools analyze the patterns, distributions, and correlations present in the real dataset and then generate new data points that closely resemble real data but do not contain any real information.

Typical tabular synthetic data use cases include addressing privacy concerns, increasing data availability, and facilitating research and innovation in data-driven applications. However, it’s essential to ensure that the synthetic data accurately captures the underlying patterns and distributions of the original data to maintain data utility and validity for downstream tasks.
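The statistical modeling route can be sketched in a few lines. The example below is a deliberately simple stand-in, not a GAN or VAE: it fits a two-column Gaussian model (means, standard deviations, and one correlation) to a toy table and samples new rows from it. The toy "real" data, the column meanings, and the function names are all assumptions for illustration. The final lines show the kind of fidelity check described above, comparing the correlation in the source and synthetic tables.

```python
import math
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation between two equally long numeric columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def fit(xs, ys):
    """Learn the summary statistics that define a bivariate Gaussian."""
    return {
        "mean_x": statistics.fmean(xs), "std_x": statistics.stdev(xs),
        "mean_y": statistics.fmean(ys), "std_y": statistics.stdev(ys),
        "corr": pearson(xs, ys),
    }


def sample(model, n, rng):
    """Draw n synthetic rows that follow the fitted means, spreads, and correlation."""
    r = model["corr"]
    residual = math.sqrt(max(0.0, 1.0 - r * r))
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = model["mean_x"] + model["std_x"] * z1
        y = model["mean_y"] + model["std_y"] * (r * z1 + residual * z2)
        rows.append((x, y))
    return rows


# Toy stand-in for a real table: age and a loosely related income (assumed data).
rng = random.Random(42)
ages = [rng.uniform(20, 65) for _ in range(500)]
incomes = [800 * age + rng.gauss(0, 8000) for age in ages]

model = fit(ages, incomes)
synthetic = sample(model, 5000, rng)
syn_ages = [row[0] for row in synthetic]
syn_incomes = [row[1] for row in synthetic]

# Fidelity check: the synthetic correlation should be close to the source one.
print("source correlation:   ", round(model["corr"], 3))
print("synthetic correlation:", round(pearson(syn_ages, syn_incomes), 3))
```

A Gaussian model like this only captures linear relationships and bell-shaped columns, which is one reason real-world tools turn to richer generative models. Matching statistics also says nothing about privacy on its own, so a separate privacy evaluation would still be needed.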

Most popular synthetic data applications

Artificially generated data opens innovation possibilities for healthcare, retail, manufacturing, finance, and other industries. The primary use cases include data upsampling, analytics, testing, and sharing.

Upsampling to enhance datasets

Upsampling means generating larger datasets from smaller ones for scaling and diversification. This method is applied when real data is scarce, imbalanced, or incomplete.

Consider a few examples. For financial institutions, developers can improve the accuracy of fraud detection models by upsampling rare observations and activity patterns in the financial data. Similarly, a marketing agency might upsample to augment data related to underrepresented groups, enhancing segmentation accuracy.
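One simple way to upsample a rare class is to create new rows by interpolating between existing ones. The sketch below does this for a small set of hypothetical fraud records. It is a simplified approach in the spirit of SMOTE, not SMOTE itself, since it pairs rows at random instead of using nearest neighbors. The feature layout (amount, hour of day) and the `upsample_minority` helper are assumptions for this example.

```python
import random


def upsample_minority(rows, target_count, seed=0):
    """Return the original rows plus interpolated rows, target_count in total."""
    if len(rows) < 2:
        raise ValueError("need at least two rows to interpolate between")
    rng = random.Random(seed)
    result = list(rows)
    while len(result) < target_count:
        a, b = rng.sample(rows, 2)   # pick two distinct original rows
        t = rng.random()             # position along the segment from a to b
        result.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return result


# Hypothetical rare fraud cases: (transaction amount, hour of day).
fraud_rows = [(950.0, 2.0), (1200.0, 3.5), (870.0, 1.0), (1500.0, 4.0)]
upsampled = upsample_minority(fraud_rows, target_count=20)

print(len(fraud_rows), "original rows ->", len(upsampled), "rows after upsampling")
```

Every generated row lies between two observed rows, so the new data stays within the range of the originals. That keeps the examples plausible, but it also means this method cannot invent patterns that the rare class never showed.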

Advanced analytics with AI-generated data

Companies can leverage AI-generated high-quality synthetic data for data modeling, business analytics, and clinical research. Synthesizing data proves to be a viable alternative when acquiring real datasets is either too expensive or time-consuming.

Synthetic data empowers researchers to conduct in-depth analyses without compromising patient confidentiality. Data scientists and researchers can work with synthetic versions of patient data, clinical conditions, and treatment details, gaining insights that would take considerably longer to obtain with real data. Likewise, manufacturers can freely share data with suppliers, including manipulated GPS and location data, to create algorithms for performance testing or to enhance predictive maintenance.

However, synthetic data evaluation is critical. The Syntho Engine’s output is validated by an internal quality assurance team and external experts from the SAS Institute. In a study of predictive modeling, we trained four machine learning models on real, anonymized, and synthetic data. Results showed that models trained on our synthetic datasets had the same level of accuracy as those trained on real datasets, while anonymized data reduced the models’ utility.

External and internal data sharing

Synthetic data simplifies data sharing within and across organizations. You can use synthetic data to exchange information without risking privacy breaches or regulatory non-compliance. The benefits of synthetic data include accelerated research outcomes and more effective collaboration.

Retail companies can share insights with suppliers or distributors using synthetic data that reflects customer behavior, inventory levels, or other key metrics. Because only synthetic data is shared, sensitive customer data and corporate secrets are kept confidential.

Syntho won the 2023 Global SAS Hackathon for our ability to generate and share accurate synthetic data effectively and risk-free. We synthesized patient data for multiple hospitals with different patient populations to demonstrate the efficacy of predictive models. Using the combined synthetic datasets was shown to be just as accurate as using real data.

Synthetic test data

Synthetic test data is artificially generated data designed to simulate data testing environments for software development. In addition to reducing privacy risks, synthetic test data enables developers to rigorously assess applications’ performance, security, and functionality across a range of potential scenarios without impacting the real system.

Our collaboration with one of the largest Dutch banks showcases synthetic data benefits for software testing. Test data generation with the Syntho Engine resulted in production-like datasets that helped the bank speed up software development and bug detection, leading to faster and more secure software releases.

Syntho’s synthetic data generation platform

Syntho provides a smart synthetic data generation platform, empowering organizations to intelligently transform data into a competitive edge. By combining all synthetic data generation methods in one platform, Syntho offers a comprehensive solution for organizations aiming to put their data to use. The platform covers:

- AI-generated synthetic data that uses artificial intelligence to mimic the statistical patterns of the original data.

- Smart de-identification to protect sensitive data by removing or modifying personally identifiable information (PII).

- Test data management that enables the creation, maintenance, and control of representative test data for non-production environments.

Our platform integrates into any cloud or on-premises environment. Moreover, we take care of the planning and deployment. Our team will train your employees to use the Syntho Engine effectively, and we’ll provide continuous post-deployment support.

You can read more about the capabilities of Syntho’s synthetic data generation platform in the Solutions section of our website.

What’s in the future for synthetic data?

Synthetic data generation with generative AI helps create and share high volumes of relevant data, bypassing format compatibility issues, regulatory constraints, and the risk of data breaches.

Unlike anonymization, generating synthetic data allows for preserving structural relationships in the data. This makes synthetic data suitable for advanced analytics, research and development, diversification, and testing.

The use of synthetic datasets will only expand across industries. Companies are poised to extend synthetic data generation to complex images, audio, and video content, and to apply machine learning models to more advanced simulations and applications.

Do you want to learn more practical applications of synthetic data? Feel free to schedule a demo on our website.

About Syntho

Syntho provides a smart synthetic data generation platform, leveraging multiple synthetic data forms and generation methods, empowering organizations to intelligently transform data into a competitive edge. Our AI-generated synthetic data mimics statistical patterns of original data, ensuring accuracy, privacy, and speed, as assessed by external experts like SAS. With smart de-identification features and consistent mapping, sensitive information is protected while preserving referential integrity. Our platform enables the creation, management, and control of test data for non-production environments, utilizing rule-based synthetic data generation methods for targeted scenarios. Additionally, users can generate synthetic data programmatically and obtain realistic test data to develop comprehensive testing and development scenarios with ease.

About the author

CEO & founder

Syntho is the scale-up that is disrupting the data industry with AI-generated synthetic data. Wim Kees has proven with Syntho that he can unlock privacy-sensitive data and make it available in a smarter and faster way, so that organizations can realize data-driven innovation. As a result, Wim Kees and Syntho won the prestigious Philips Innovation Award, won the Global SAS Hackathon in healthcare and life science, and were selected as a leading generative AI scale-up by NVIDIA.
